SURE-GB: Identifying Stereotypical, Under-REpresentational, and Algorithmic Gender Bias in Machine Translation

Abstract

SURE-GB aims to build an automated service that identifies occupation-related under-representational, stereotypical, and algorithmic gender bias in machine translation, targeting English, French, and a lower-resource language, Greek. The proposed method involves creating a curated knowledge graph that a) encodes standardised knowledge and data for occupations (based on data and hierarchies from EU-LFS, the ESS, and the International Standard Classification of Occupations, ISCO), and b) incorporates statistics on occupation-related gendered language usage derived from linguistic corpora. Our goal is to develop a ready-to-use machine learning toolkit that uses this knowledge to detect and categorise gender biases in order to: a) provide actionable recommendations for improvement, b) establish guidelines for unbiased language translation, and c) raise awareness of gender biases in machine translation systems.

Motivation

Gender bias in machine translation (MT) systems is a pervasive issue that compromises the accuracy and fairness of automated translations. Such biases can reinforce harmful stereotypes and contribute to gender inequality, particularly in the context of occupational terms. The problem is exacerbated when MT systems, which are widely used in diverse applications, systematically associate certain professions with specific genders.

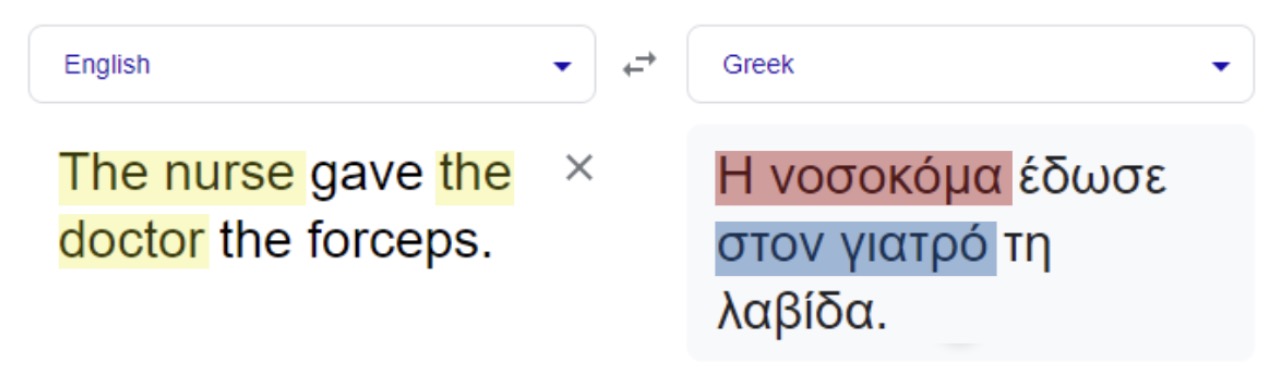

Consider the above example, where “the doctor”, without any gender indication, is translated into Greek as “ο γιατρός” (the male doctor), while “the nurse” is consistently rendered as “η νοσοκόμα” (the female nurse). This illustrates how MT systems can reinforce gender stereotypes by associating certain occupations predominantly with one gender. Such biases are not only misleading but also detrimental, as they perpetuate traditional gender roles and contribute to the gender disparities observed in various professional sectors. Addressing and mitigating these biases is critical to ensure that technology promotes gender equality rather than perpetuating discrimination.

Our motivation derives from two primary concerns, the persistent gender inequalities in the labour market and gendered algorithmic bias, both of which are highlighted in strategic social policy documents such as the European Commission’s Gender Equality Strategy 2020-2025 (EU Commission, 2020). The Commission emphasises the need to challenge gender stereotypes, which it identifies as fundamental drivers of gender inequality across all societal domains and as significant contributors to the gender pay and pension gaps. Moreover, the Strategy places a specific focus on Artificial Intelligence, highlighting the need to further explore its potential to amplify or contribute to gender biases. Gender bias in machine translation systems is a significant instance of this concern.

Knowledge Graph

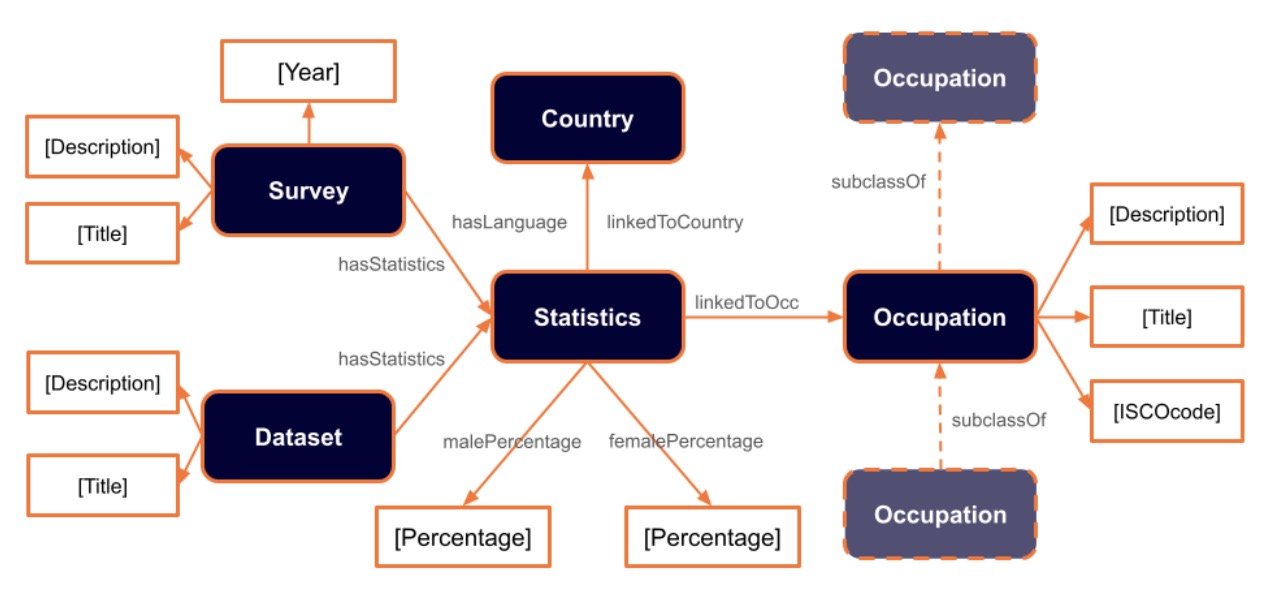

In this work, we have focused on employment data from the UK, Greece, and France, and we have extracted the corresponding gender statistics for English, Greek, and French from the WMT datasets and part of the C4 dataset. This data collection enabled us to create the Knowledge Graph. By systematically integrating structured occupational classifications with gender statistics, we have constructed a detailed representation of gender distribution across occupations.

The Knowledge Graph serves as a resource for studying gender bias in machine translation systems and provides insights into gender representation within different professional sectors. This approach allows for a nuanced understanding of how occupations are “gendered” both in the actual labour market and in the textual data used to train and evaluate MT systems. Beyond its utility for computer scientists and AI researchers, the Knowledge Graph is also of interest to social researchers and scholars in fields such as Science and Technology Studies (STS), offering a tool for examining the intersection of technology and gender and a resource for those aiming to address and mitigate gender biases in both technology and society.

The Knowledge Graph (KG) is fundamentally based on the International Standard Classification of Occupations (ISCO-08), which provides a hierarchical taxonomy of occupations. This hierarchy organizes occupations in our KG into broader and narrower categories linked through “subclassOf” relations. For example, “Professionals” (ISCO code 2) includes “Health Professionals” (ISCO code 22) as a subclass, which in turn includes “Medical Doctors” (ISCO code 221) and “Nursing and Midwifery Professionals” (ISCO code 222), among others. Each occupation in the KG has a title, description, and ISCO code, all extracted from the ISCO-08 standard.
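Because ISCO-08 codes are hierarchical by prefix (one-digit major groups, two-digit sub-major groups, three-digit minor groups and four-digit unit groups), the “subclassOf” links can in principle be derived from the codes themselves. The sketch below does this for the example above; the function names and the tuple-based triple representation are illustrative assumptions, not the project's actual KG-construction code.

```python
# Minimal sketch (hypothetical helpers, not the SURE-GB code): derive "subclassOf"
# triples from ISCO-08 codes, where a group's parent is its code minus the last digit.
from typing import Optional


def isco_parent(code: str) -> Optional[str]:
    """Return the parent ISCO-08 code, or None for a one-digit major group."""
    return code[:-1] if len(code) > 1 else None


def subclass_triples(titles: dict[str, str]) -> list[tuple[str, str, str]]:
    """Build (child, "subclassOf", parent) triples for every code whose parent is present."""
    triples = []
    for code in sorted(titles):
        parent = isco_parent(code)
        if parent is not None and parent in titles:
            triples.append((titles[code], "subclassOf", titles[parent]))
    return triples


occupations = {
    "2": "Professionals",
    "22": "Health Professionals",
    "221": "Medical Doctors",
    "222": "Nursing and Midwifery Professionals",
}

for triple in subclass_triples(occupations):
    print(triple)
```

A real KG would likely attach the ISCO description to each node as well; the sketch only shows how the hierarchy itself can be recovered.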

The Knowledge Graph also integrates comprehensive statistical data about gender representation in various occupations. This integration is achieved through “Statistics” entities, which link occupations to gender statistics. Each “Statistics” entity includes two key attributes: malePercentage and femalePercentage. These percentages indicate either the proportion of male and female workers in a given occupation or, for corpus-derived statistics, the proportion of masculine and feminine mentions of the occupation in textual corpora.

The “Statistics” entities are connected to either a “Dataset” entity or a “Survey” entity, depending on the source of the data. Each “Dataset” entity includes a title and description, reflecting the dataset’s content. If the statistics are derived from a survey, the “Survey” entity also includes a title, description, and the year or time period of the survey.

Furthermore, each “Statistics” entity is linked to a “Country” entity, which provides context: the geographical origin of survey data, or the language of textual corpora. When the statistics are linked to a dataset, the relationship is represented by the “hasLanguage” relation, indicating the language of the analyzed texts. Conversely, if the statistics come from a survey, the “linkedToCountry” relation specifies the country from which the survey data originate and to which they refer.
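The “Statistics” schema described above can be sketched with plain dataclasses that serialise to triples. The attribute names malePercentage and femalePercentage and the relations “hasLanguage” and “linkedToCountry” come from the text; the relation names “statisticsFor”, “fromDataset” and “fromSurvey”, and all Python class and field names, are hypothetical, since the text does not name them.

```python
# Minimal sketch of a "Statistics" entity and its links; relation names other than
# hasLanguage / linkedToCountry / malePercentage / femalePercentage are hypothetical.
from dataclasses import dataclass
from typing import Optional


@dataclass
class Source:
    kind: str                     # "Dataset" or "Survey"
    title: str
    description: str
    period: Optional[str] = None  # only surveys carry a year or time period


@dataclass
class Statistics:
    stat_id: str
    occupation: str               # ISCO-08 code of the occupation
    male_percentage: float
    female_percentage: float
    source: Source
    country: str                  # Country entity: survey country, or corpus language for datasets


def to_triples(s: Statistics) -> list[tuple]:
    """Serialise one Statistics entity as (subject, relation, object) triples."""
    triples = [
        (s.stat_id, "statisticsFor", s.occupation),
        (s.stat_id, "malePercentage", s.male_percentage),
        (s.stat_id, "femalePercentage", s.female_percentage),
    ]
    if s.source.kind == "Dataset":
        # Corpus statistics: the Country entity stands for the language of the texts.
        triples.append((s.stat_id, "fromDataset", s.source.title))
        triples.append((s.stat_id, "hasLanguage", s.country))
    elif s.source.kind == "Survey":
        # Survey statistics: the Country entity is where the survey data come from.
        triples.append((s.stat_id, "fromSurvey", s.source.title))
        triples.append((s.stat_id, "linkedToCountry", s.country))
    else:
        raise ValueError(f"unknown source kind: {s.source.kind}")
    return triples
```

Keeping the choice of relation inside `to_triples` mirrors the rule in the text: the same “Country” link is labelled differently depending on whether the figures come from a corpus or a survey.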

Bias Detection System

A major part of this work involves the techniques employed to extract relevant concepts from text (in our case, occupations), identify their gender, and detect and categorise possibly biased translations. Specifically, we combine statistics from real-world labour data and textual corpora to create a comprehensive resource for analysing gender bias in machine translation. To accomplish this, we used various sources, including national and European statistical agencies and databases, to obtain accurate and up-to-date labour statistics, as mentioned above. Additionally, we developed a pipeline to extract gender statistics from textual datasets, enabling the integration of these diverse data sources.

The proposed methodology can be divided into four subtasks:

- Subtask 1: Detect Occupations

- Subtask 2: Link Occupations with the SURE-GB Knowledge Graph

- Subtask 3: Detect the Gender of each occupation in the textual corpora

- Subtask 4: Compare the Gender Representation of Occupations between the Source and Translated Text

Subtask 1: Detect Occupations

For the Occupation Extraction module, we employed a Large Language Model (LLM) to detect occupations in a given text. The LLM was instructed, via zero-shot prompting, to identify the occupations in the text, together with the form in which each appears in the text and a corresponding description. The descriptions facilitate matching the identified occupations to those in the Knowledge Graph and help mitigate LLM hallucinations.

As an illustration, consider the following input sentence and the corresponding output of the Occupation Extraction module (this example was produced with Llama2-70b as the deployed LLM):

Input:

The doctor put the cast on my leg while talking to the nurses about his new car.

Output:

Occupation title: Doctor

Appearing in text as: doctor

Description: A medical professional who diagnoses and treats illnesses and injuries.

Occupation title: Nurse

Appearing in text as: nurses

Description: A healthcare professional who assists doctors and provides hands-on care to patients
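Output in the “Occupation title / Appearing in text as / Description” layout shown above can be turned into structured records before the later stages. The regex-based parser below is a sketch that assumes the model reliably emits these three labels in this order; the `Detection` record and its field names are hypothetical, and the actual prompt used by the project is not reproduced here.

```python
# Minimal sketch: parse LLM replies in the "Occupation title / Appearing in text as /
# Description" layout into records. Assumes the labels appear verbatim and in order.
import re
from dataclasses import dataclass


@dataclass
class Detection:
    title: str
    surface: str       # the occupation as it appears in the input text
    description: str


# One record; fields may be separated by spaces or blank lines, as in the example above.
RECORD = re.compile(
    r"Occupation title:\s*(?P<title>.+?)\s*"
    r"Appearing in text as:\s*(?P<surface>.+?)\s*"
    r"Description:\s*(?P<description>.+?)\s*(?=Occupation title:|\Z)",
    re.DOTALL,
)


def parse_detections(llm_output: str) -> list[Detection]:
    """Extract every (title, in-text form, description) record from the LLM reply."""
    return [
        Detection(m["title"], m["surface"], m["description"])
        for m in RECORD.finditer(llm_output)
    ]
```

A reply that contains no labelled record simply yields an empty list, which fits the case where the text mentions no occupation.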

We experimented with multiple LLMs, including Llama2-7b, Llama2-13b, Llama2-70b, Mistral-7b-v0.1, Mixtral-8x7B-v0.1, TowerBase-7B-v0.1, and Meltemi-7B-v1.

We identified two primary forms of hallucination and addressed them separately.

- The first form involves the LLM detecting occupations that are not present in the text. To address this, we asked the LLM to provide the in-text form of each detected occupation along with its title and description. We then used fuzzy string matching to verify that these terms actually appear in the input text. If a detected term did not match any part of the input text above a certain similarity threshold, it was discarded as a hallucination.

- The second form of hallucination occurs when the LLM incorrectly identifies non-occupational terms as occupations. This issue was particularly prevalent with smaller models and in cases where no occupations were present in the input text. We addressed this form of hallucination using the second module of our pipeline, which is described below.
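The fuzzy-matching check for the first form of hallucination can be sketched with Python's standard `difflib`. The text does not say which fuzzy matcher or threshold the project uses, so `SequenceMatcher`, the word-window strategy and the 0.8 cut-off below are assumptions chosen for illustration.

```python
# Minimal sketch of the first hallucination filter, using difflib as a stand-in
# fuzzy matcher; the window strategy and the 0.8 threshold are assumptions.
from difflib import SequenceMatcher


def best_match_score(term: str, text: str) -> float:
    """Highest similarity between `term` and any window of as many words in `text`."""
    words = text.lower().split()
    n = max(1, len(term.split()))
    target = term.lower()
    best = 0.0
    for i in range(max(1, len(words) - n + 1)):
        window = " ".join(words[i:i + n])
        best = max(best, SequenceMatcher(None, target, window).ratio())
    return best


def filter_hallucinated(surface_forms: list[str], text: str, threshold: float = 0.8) -> list[str]:
    """Keep only detected terms that (approximately) occur in the input text."""
    return [t for t in surface_forms if best_match_score(t, text) >= threshold]


sentence = "The doctor put the cast on my leg while talking to the nurses about his new car."
print(filter_hallucinated(["doctor", "nurses", "mechanic"], sentence))
```

Matching approximately rather than exactly tolerates small differences such as “nurse” versus “nurses”, while a term like “mechanic”, which never occurs in the sentence, is dropped.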

Subtask 2: Link Occupations with the SURE-GB Knowledge Graph

To ensure the occupations detected by the Large Language Model in the first stage align with the Knowledge Graph that is curated by domain specialists, we implemented a linking module. Since the KG is based on ISCO-08, which includes not only an occupation taxonomy but also descriptions for each occupation, we framed this task as a retrieval problem. The descriptions generated by the LLM for each detected job title are used to retrieve the most closely matching occupation from the KG.

To accomplish this, we converted both the descriptions of each occupation in the KG and those generated by the LLM into embeddings. Following the approach proposed by Li and Li (2023), we used angle-optimized (AnglE) embeddings to map the descriptions into a latent space where they can be easily compared. We then used cosine similarity as the similarity measure to find the closest matching descriptions.

The following example shows the KG occupations matched to the occupations detected in the previous step.

Doctor → Medical Doctor (ISCO code: 221): Medical doctors (physicians) study, diagnose, treat, and prevent illness, disease, injury, and other physical and mental impairments in humans through the application of the principles and procedures of modern medicine. They plan, supervise, and evaluate the implementation of care and treatment plans by other health care providers, and conduct medical education and research activities.

Nurse → Nursing and midwifery professional (ISCO code: 222): Nursing and midwifery professionals provide treatment and care services for people who are physically or mentally ill, disabled or infirm, and others in need of care due to potential risks to health including before, during and after childbirth. They assume responsibility for the planning, management, and evaluation of the care of patients, including the supervision of other health care workers, working autonomously or in teams with medical doctors and others in the practical application of preventive and curative measures.

By setting a similarity threshold, we can effectively filter out hallucinations where a detected term is misidentified as an occupation. If the similarity between the LLM-provided description and any existing occupation description in the KG falls below this threshold, the detected occupation is disregarded.

This retrieval and embedding-similarity approach helps us ensure that only valid occupations, as defined in our curated KG, are considered, thereby addressing potential hallucinations from the initial detection stage. By rigorously matching descriptions, we maintain the accuracy and reliability of the occupation data integrated into the Knowledge Graph.
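The retrieval-with-threshold step can be sketched as follows. The sketch assumes the description embeddings have already been computed (by AnglE or any other encoder, which is not included here), uses toy three-dimensional vectors purely for illustration, and picks a hypothetical threshold of 0.5; the function names are also hypothetical.

```python
# Minimal sketch of Subtask 2: link an LLM description embedding to the closest KG
# occupation by cosine similarity, rejecting matches below a (hypothetical) threshold.
import math
from typing import Optional


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def link_to_kg(
    query_vec: list[float],
    kg_entries: list[tuple[str, list[float]]],
    threshold: float = 0.5,
) -> Optional[tuple[str, float]]:
    """Return (KG label, similarity) of the best match, or None if it is below threshold."""
    label, sim = max(
        ((lab, cosine(query_vec, emb)) for lab, emb in kg_entries),
        key=lambda pair: pair[1],
    )
    # Below the threshold the detection is treated as a non-occupation (hallucination).
    return (label, sim) if sim >= threshold else None


kg = [
    ("Medical doctors (221)", [1.0, 0.0, 0.0]),
    ("Nursing and midwifery professionals (222)", [0.0, 1.0, 0.0]),
]
print(link_to_kg([0.9, 0.1, 0.0], kg))   # close to the "Medical doctors" vector
print(link_to_kg([0.0, 0.0, 1.0], kg))   # orthogonal to both, so rejected
```

With real sentence embeddings the threshold would need to be tuned on held-out examples; the value used here carries no meaning beyond the toy vectors.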

Subtask 3: Detect the Gender of each occupation in the textual corpora

The final stage of the statistics-extraction pipeline, and its most challenging part, is the gender identification module. This module aims to identify the gender of an occupation in the text or to conclude that the gender cannot be determined from the context. This allows us to calculate gender statistics for the occupations detected and linked to the knowledge graph in the previous stages and, ultimately, to incorporate these statistics into the Knowledge Graph.

We identified three distinct cases for deriving the gender of an occupation, which we examine in order; once one case determines the gender, the remaining steps are skipped. The first case occurs when the occupation word itself indicates gender. This is common both in notional gender languages such as English and in grammatical gender languages such as Spanish, French, and Greek, where word variants often signify gender (e.g., waiter/waitress, or in Greek “νοσοκόμος” for a male nurse and “νοσοκόμα” for a female nurse). We use the spaCy library to automatically detect whether a word carries a gender indication.
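A minimal sketch of the first case, assuming a spaCy v3 pipeline whose morphologizer assigns Universal Dependencies features (for example a French or Greek model). In spaCy v3, `token.morph.get("Gender")` returns a list of values such as `["Fem"]`; how the project maps these values, and the helper name used here, are assumptions.

```python
# Minimal sketch of case 1 (gender marked on the occupation word itself).
# Assumes spaCy v3 tokens whose morphology uses UD features (Gender=Masc/Fem/Neut).
from typing import Optional

UD_GENDER = {"Masc": "masculine", "Fem": "feminine"}


def gender_from_word(token) -> Optional[str]:
    """Return the gender encoded on the token, or None if absent or ambiguous."""
    values = token.morph.get("Gender")  # e.g. ["Fem"]; [] when the feature is missing
    genders = {UD_GENDER[v] for v in values if v in UD_GENDER}
    return genders.pop() if len(genders) == 1 else None
```

In use, one would load a model (e.g. `spacy.load("el_core_news_sm")`), parse the text, and pass the occupation token found in Subtask 1. The function accepts any object exposing `.morph.get`, so it can be tested without a model, and it returns `None` for neuter or unmarked words so that the next case can take over.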

If the occupation word does not indicate gender, we proceed to the second case, where gender is directly indicated by pronouns. For example, in the sentence “He is a nurse”, the pronoun “He” directly indicates the gender of the nurse. To identify such cases, we construct the syntactic dependency tree using spaCy and check for any direct link between a gendered pronoun and the occupation.
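The direct-link check can be sketched over a parse represented as (text, head index) pairs, mirroring spaCy's `token.text` and `token.head.i`. The English pronoun lexicon and the helper name are hypothetical. The example parse follows Universal Dependencies conventions, where the copula attaches to the noun so that “He” depends directly on “nurse”. spaCy's English models instead make the verb the head of both, so a practical version would probably also need to handle such copular constructions.

```python
# Minimal sketch of case 2: a gendered pronoun directly linked to the occupation
# in the dependency tree. The pronoun lexicon is a hypothetical English example.
from typing import Optional

PRONOUN_GENDER = {
    "he": "masculine", "him": "masculine", "his": "masculine", "himself": "masculine",
    "she": "feminine", "her": "feminine", "hers": "feminine", "herself": "feminine",
}


def gender_from_pronoun(tokens: list[tuple[str, int]], occ: int) -> Optional[str]:
    """Look for a gendered pronoun with a direct arc to/from token index `occ`.

    `tokens` lists (text, head_index) pairs; the root points to itself.
    Returns None if no such pronoun exists or the pronouns disagree.
    """
    found = set()
    for i, (text, head) in enumerate(tokens):
        gender = PRONOUN_GENDER.get(text.lower())
        if gender is None or i == occ:
            continue
        # Direct link: the pronoun depends on the occupation, or vice versa.
        if head == occ or tokens[occ][1] == i:
            found.add(gender)
    return found.pop() if len(found) == 1 else None


# UD-style parse of "He is a nurse": every token depends on "nurse" (index 3).
parse = [("He", 3), ("is", 3), ("a", 3), ("nurse", 3)]
print(gender_from_pronoun(parse, 3))
```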

If neither the occupation word nor a direct pronoun indicates gender, we move to the third case: gender indication through coreference. Consider the text, “Today the doctor came to the hospital 45 minutes late. Consequently, his first appointment had already left.” Here, the gender of “doctor” is inferred from the pronoun “his” in the second sentence. For this, we use the Coreferee library to find all linguistic expressions (also called mentions) in the text that refer to the same entity, here the occupation of interest. We then check the gender of the words and pronouns linked to the occupation. If we find a gender indication, we assign it to the occupation; if not, we conclude that the gender cannot be determined from the text and exclude this detection from our statistics. The example below, continuing the previous ones, illustrates the output of the Gender Identification module.

Doctor → Masculine (Coreference)

Nurse → Gender not clear
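The coreference case and the stepwise cascade over the three cases can be sketched as follows. Coreferee exposes its chains on `doc._.coref_chains`, and each mention carries token indexes. To run without the library, the sketch instead takes the chains as plain nested lists of token indexes plus a per-token gender lookup. The function names are hypothetical, and treating conflicting genders as “not clear” is an assumption.

```python
# Minimal sketch of case 3 (coreference) and of the stepwise cascade over cases 1-3.
from typing import Callable, Optional


def gender_from_coref(
    chains: list[list[list[int]]],
    occ: int,
    gender_of_token: Callable[[int], Optional[str]],
) -> Optional[str]:
    """Infer the gender of token `occ` from the mentions it corefers with.

    `chains`: coreference chains -> mentions -> token indexes.
    Returns None when no chain yields a single, consistent gender.
    """
    genders = set()
    for chain in chains:
        if any(occ in mention for mention in chain):
            for mention in chain:
                for idx in mention:
                    if idx != occ and (g := gender_of_token(idx)):
                        genders.add(g)
    return genders.pop() if len(genders) == 1 else None


def identify_gender(steps: list[Callable[[], Optional[str]]]) -> str:
    """Run the cases in order and stop at the first one that decides the gender."""
    for step in steps:
        gender = step()
        if gender is not None:
            return gender
    return "not clear"


# "Today the doctor came to the hospital 45 minutes late. Consequently, his first ..."
# Token 2 is "doctor" and token 13 is "his"; one chain links the two mentions.
chains = [[[2], [13]]]
pronouns = {13: "masculine"}
print(identify_gender([
    lambda: None,                                         # case 1: word not marked
    lambda: None,                                         # case 2: no direct pronoun
    lambda: gender_from_coref(chains, 2, pronouns.get),   # case 3: coreference
]))
```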

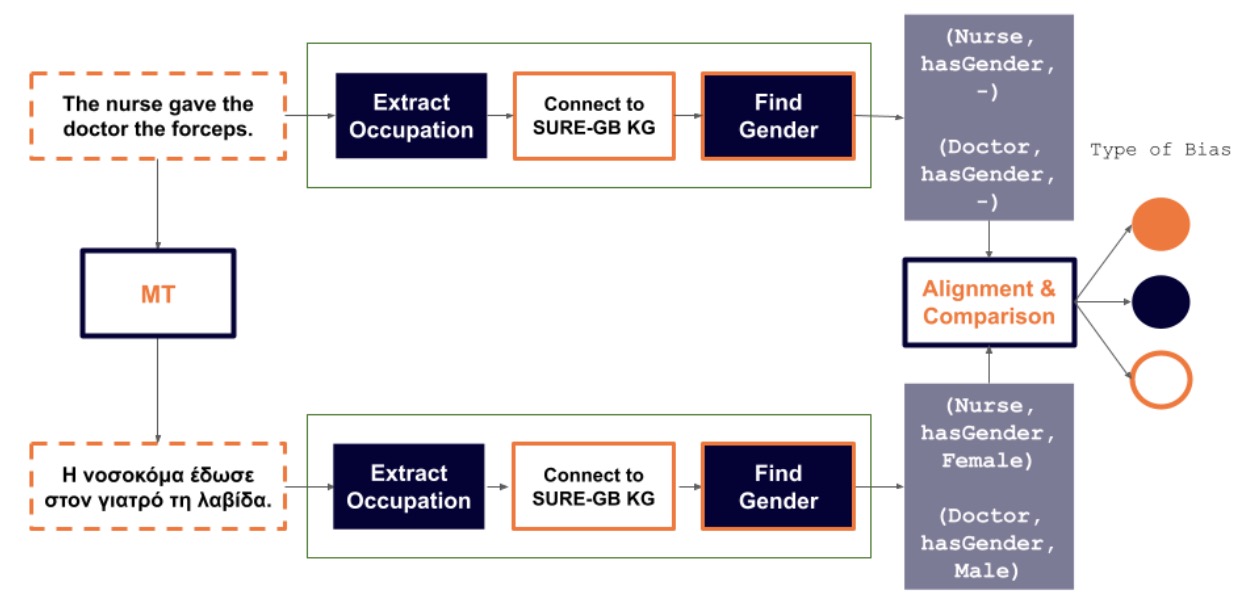

Subtask 4: Compare the Gender Representation of Occupations between the Source and Translated Text

In this phase of our methodology, we first establish a mapping between occupation terms in the source text and their equivalents in the target text. For instance, the English word “doctor” corresponds to “γιατρός” in the translated text. Once these correspondences are set, we apply the gender identification techniques described in Subtask 3 to both sides. This step involves analysing the gender assigned to each occupation in the source and in the translation in order to detect any discrepancies or mismatches in gender representation across languages.
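Once occupations are aligned and each side has a gender label from Subtask 3, detecting a mismatch reduces to comparing the two labels per aligned pair. The sketch below assumes the alignment is already available as a simple dictionary from source term to target term (the alignment method itself is not specified in the text) and uses `None` for “gender not clear”. Keying by surface string is a simplification that would not distinguish repeated mentions.

```python
# Minimal sketch of the mismatch check in Subtask 4; the dictionary-based alignment
# and the function name are assumptions made for illustration.
from typing import Optional


def gender_mismatches(
    alignment: dict[str, str],
    source_gender: dict[str, Optional[str]],
    target_gender: dict[str, Optional[str]],
) -> list[tuple[str, str, Optional[str], Optional[str]]]:
    """Return (source, target, source gender, target gender) for pairs whose genders differ.

    A gender of None means the text does not determine it, so a gender-neutral
    source rendered with a gendered translation counts as a mismatch.
    """
    mismatches = []
    for src, tgt in alignment.items():
        g_src = source_gender.get(src)
        g_tgt = target_gender.get(tgt)
        if g_src != g_tgt:
            mismatches.append((src, tgt, g_src, g_tgt))
    return mismatches


# The introductory example: neither occupation is gendered in the English source.
print(gender_mismatches(
    {"doctor": "γιατρός", "nurse": "νοσοκόμα"},
    {"doctor": None, "nurse": None},
    {"γιατρός": "masculine", "νοσοκόμα": "feminine"},
))
```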

We then use the statistics in the knowledge graph to pinpoint the origins of these biases. Using a predefined set of criteria, we assess and categorise the nature of each bias. This structured analysis enables us not only to detect but also to understand the underlying causes of gender biases in machine translation, supporting a more equitable and accurate representation across languages.

Bias Types

When a gender mismatch between the source and the generated text is detected, the system classifies it into one of three categories of potentially harmful gender bias:

- Under-representational bias occurs when the gender assignment aligns with the low representation of a specific gender in linguistic corpora, and that low representation is consistent with reality (e.g., “the plumber” is translated as masculine, and plumbers are very rarely women).

- Stereotypical bias occurs when the gender assignment aligns with negative generalisations resulting from an imbalance in linguistic corpora, rather than reflecting reality. For example, “the doctor” may be translated as masculine due to its predominantly masculine use in linguistic corpora, even though doctors are just as likely to be women.

- Algorithmic bias occurs when the gender assignment is possibly due to a failure of the machine translation algorithm (i.e., it is not grounded in a low representation of a specific gender in linguistic corpora).
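The exact criteria used to separate the three categories are not given in the text. One possible reading compares how strongly the corpus statistics and the labour-market statistics in the KG favour the gender the MT system assigned, and the sketch below follows that reading. The shares are fractions in [0, 1] (the KG's malePercentage or femalePercentage divided by 100), and the 0.7 “dominance” cut-off and the function name are hypothetical.

```python
# Minimal sketch of one possible bias-type rule; the dominance cut-off is hypothetical.
def classify_bias(corpus_share: float, labour_share: float, dominance: float = 0.7) -> str:
    """Classify a detected gender mismatch.

    corpus_share: fraction of corpus mentions of the occupation with the gender
        the MT system assigned.
    labour_share: fraction of workers in the occupation with that gender (survey data).
    """
    if corpus_share < dominance:
        # The corpora do not clearly favour the assigned gender: not grounded in the data.
        return "algorithmic"
    if labour_share >= dominance:
        # Corpora and labour market agree: the imbalance mirrors reality.
        return "under-representational"
    # The corpora are skewed but the labour market is not.
    return "stereotypical"
```

Checking the corpus statistics first reflects the definitions above: algorithmic bias is defined by the absence of corpus grounding, while the other two categories differ only in whether that corpus imbalance matches reality.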

Team

PhD student at the AILS lab of NTUA

PhD student at the AILS lab of NTUA

PhD student at the AILS lab of NTUA

PhD student at the AILS lab of NTUA

Adjunct Lecturer of Social Statistics at Panteion University of Social and Political Sciences

Associate Professor of Sociology-Methods and Techniques of Social Research at NKUA

Professor of Social Statistics at Panteion University of Social and Political Sciences

Professor and director of the AILS lab of NTUA


Publications

GOSt-MT: A Knowledge Graph for Occupation-related Gender Biases in Machine Translation

Orfeas Menis Mastromichalakis, Giorgos Filandrianos, Eva Tsouparopoulou, Dimitris Parsanoglou, Maria Symeonaki, Giorgos Stamou

KG-STAR’24: Workshop on Knowledge Graphs for Responsible AI, co-located with the 33rd ACM CIKM Conference, October 25, 2024, Boise, Idaho

Code

https://github.com/ails-lab/Sure-GB


Acknowledgment

This project is funded as part of an FSTP call from the EU project UTTER (Unified Transcription and Translation for Extended Reality), supported by the European Union’s Horizon Europe programme under grant agreement No. 101070631. UTTER leverages large language models to build the next generation of multimodal eXtended reality (XR) technologies for transcription, translation, summarisation, and minuting, with use cases covering personal assistants for meetings that can improve communication in the online world and advanced customer service assistants to support global markets. Learn more at he-utter.eu.